With very broad support for simulating RISC‑V, Armv8-R and Armv8-A platforms, Renode addresses the need to simulate increasingly complex scenarios, including multi-core heterogeneous embedded systems and server applications for management, security, machine learning acceleration and more.

The complexity and diversity of I/O and processing elements in high-performance platforms often require multiple stages of bootloaders, hypervisors, rich operating systems and applications. Renode lets you represent complex hardware setups in the necessary detail and mock optional elements, so that you can focus on any part of your software stack, or all of it: from complex boot flows and hypervisor-based separation of tasks all the way to the userspace level.

This article focuses on how the addition of virtualization capabilities to the Cortex-R52 model lets us run hypervisors and isolated guest software in Renode, using the open source Xen and HiRTOS hypervisors as examples.

Principles behind virtualization

Two-stage memory translation

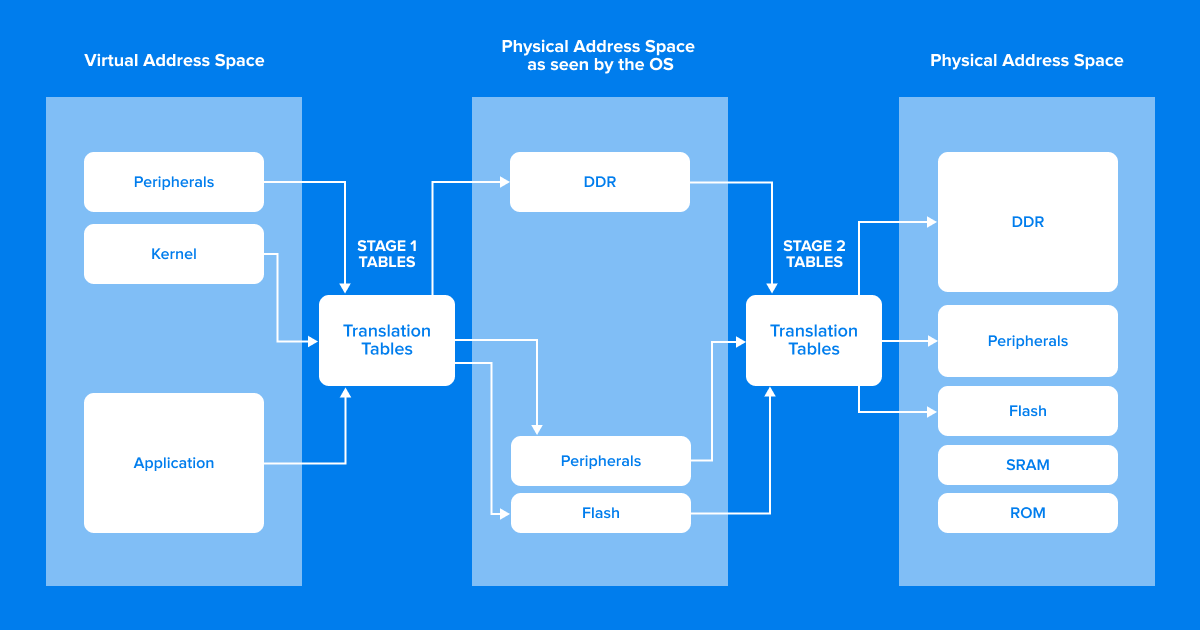

Without virtualization, memory translation usually has a single stage: a virtual address is translated directly to a physical address. Virtualization isolates guest software by introducing a second stage of translation. The guest translates virtual addresses to intermediate physical addresses, and the hypervisor translates those to physical addresses, so virtual machines never access physical memory directly.
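To make the two stages concrete, here is a minimal Python sketch of the idea, not of how Renode or real hardware implements it. It assumes single-level page tables stored as dicts and 4 KiB pages; real Arm translation tables are multi-level structures walked by hardware. Stage 1, controlled by the guest, maps a virtual address (VA) to an intermediate physical address (IPA); stage 2, controlled by the hypervisor, maps the IPA to a physical address (PA). The names `translate`, `guest_access` and `TranslationFault` are hypothetical.

```python
# Hypothetical model of two-stage address translation (not Renode code).
# Page tables are single-level dicts: page number -> page number.

PAGE_SIZE = 4096  # assumed 4 KiB pages


class TranslationFault(Exception):
    """Raised when an address has no mapping at a given stage."""


def translate(table, addr, stage):
    """Translate addr through one stage's page table."""
    page, offset = divmod(addr, PAGE_SIZE)
    if page not in table:
        raise TranslationFault(f"stage {stage} fault at {addr:#x}")
    return table[page] * PAGE_SIZE + offset


def guest_access(stage1, stage2, va):
    """Stage 1 (guest): VA -> IPA; stage 2 (hypervisor): IPA -> PA."""
    ipa = translate(stage1, va, stage=1)
    return translate(stage2, ipa, stage=2)


# The guest maps its VA pages 0x10-0x11 to what it believes is memory at IPA pages 0-1.
guest_stage1 = {0x10: 0x0, 0x11: 0x1}
# The hypervisor backs those IPA pages with physical pages 0x80000-0x80001.
vm_stage2 = {0x0: 0x80000, 0x1: 0x80001}

print(hex(guest_access(guest_stage1, vm_stage2, 0x10123)))  # 0x80000123

# The guest can rewrite its own stage 1 table, but an IPA the hypervisor
# has not mapped at stage 2 still faults.
guest_stage1[0x12] = 0x2
try:
    guest_access(guest_stage1, vm_stage2, 0x12000)
except TranslationFault as err:
    print(err)  # stage 2 fault at 0x2000
```

The last access shows where the isolation comes from: the guest fully controls its stage 1 table, but any IPA that the hypervisor has not mapped at stage 2 faults instead of reaching physical memory.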

For systems that use a memory protection unit (MPU) rather than a memory management unit (MMU), such as those based on Cortex-R, separation can also be achieved without two-stage memory translation: memory protection alone can guarantee that each virtualized guest has access only to the memory reserved for it. This approach also requires some configuration on the guest side.
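A rough Python sketch of MPU-based separation, assuming the two-level arrangement of the Armv8-R profile, in which the guest programs its own EL1 MPU and the hypervisor programs an EL2 MPU. No address translation takes place; an access succeeds only if both MPUs permit it. Region alignment rules, attributes and background regions are heavily simplified, and `Region`, `mpu_allows` and `guest_access_ok` are hypothetical names.

```python
# Hypothetical model of two-level MPU protection (no address translation).
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    base: int       # first address covered (inclusive)
    limit: int      # last address covered (inclusive)
    writable: bool  # read-only if False

    def allows(self, addr, write):
        return self.base <= addr <= self.limit and (self.writable or not write)


def mpu_allows(regions, addr, write):
    """An MPU permits the access if any of its regions does."""
    return any(r.allows(addr, write) for r in regions)


def guest_access_ok(el1_regions, el2_regions, addr, write=False):
    """The guest's EL1 MPU and the hypervisor's EL2 MPU must both permit it."""
    return mpu_allows(el1_regions, addr, write) and mpu_allows(el2_regions, addr, write)


# The hypervisor reserves 1 MiB of RAM for this guest ...
el2_guest_a = [Region(0x2000_0000, 0x200F_FFFF, writable=True)]
# ... while a careless guest grants itself the entire address space.
el1_guest_a = [Region(0x0000_0000, 0xFFFF_FFFF, writable=True)]

print(guest_access_ok(el1_guest_a, el2_guest_a, 0x2000_1000, write=True))  # True
print(guest_access_ok(el1_guest_a, el2_guest_a, 0x3000_0000, write=True))  # False
```

Even though the guest grants itself everything, writes outside the window reserved by the hypervisor are refused. A well-behaved guest still has to be built and configured for its reserved addresses, which is the guest-side configuration this approach depends on.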

Hypervisor traps

While two-stage memory translation or protection lets us grant a VM controlled access to physical memory, we still need to make sure the VM can use various peripherals without interfering with other guests. This is handled by hypervisor traps, which intercept guest accesses to peripherals and let the hypervisor respond to them as if it were the memory-mapped peripheral itself.
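The following Python sketch models the trap-and-emulate idea under simplifying assumptions: guest RAM is mapped directly, any other address is treated as unmapped, and an access to it is handed to the hypervisor (on real hardware this arrives as an exception taken to the hypervisor). The hypervisor then emulates a separate virtual UART for each VM, so two guests can use the same device address without seeing each other's state. `Vm`, `Hypervisor`, `VirtualUart` and the address 0x9000_0000 are hypothetical.

```python
# Hypothetical trap-and-emulate model (not Xen or Renode code).

class VirtualUart:
    """Emulated UART; one instance per VM, so guests never share its state."""
    TX_OFFSET = 0x0  # assumed offset of the transmit register

    def __init__(self):
        self.output = []

    def write(self, offset, value):
        if offset == self.TX_OFFSET:
            self.output.append(chr(value & 0xFF))


class Hypervisor:
    def __init__(self):
        self.devices = []  # (vm_id, base, size, device)

    def add_device(self, vm_id, base, size, device):
        self.devices.append((vm_id, base, size, device))

    def handle_trap(self, vm, addr, value):
        """Entered instead of a bus write when a guest hits an unmapped address."""
        for owner, base, size, dev in self.devices:
            if owner == vm.vm_id and base <= addr < base + size:
                dev.write(addr - base, value)
                return
        raise PermissionError(f"VM {vm.vm_id}: no device at {addr:#x}")


class Vm:
    def __init__(self, vm_id, ram_size):
        self.vm_id = vm_id
        self.ram = bytearray(ram_size)  # mapped directly, accessed without traps

    def write(self, hv, addr, value):
        if addr < len(self.ram):
            self.ram[addr] = value & 0xFF
        else:
            hv.handle_trap(self, addr, value)  # not mapped: trap to the hypervisor


hv = Hypervisor()
vm_a, vm_b = Vm(0, 0x1000), Vm(1, 0x1000)
uart_a, uart_b = VirtualUart(), VirtualUart()
# Both guests see "their" UART at the same address.
hv.add_device(vm_a.vm_id, 0x9000_0000, 0x1000, uart_a)
hv.add_device(vm_b.vm_id, 0x9000_0000, 0x1000, uart_b)

for ch in "hi":
    vm_a.write(hv, 0x9000_0000, ord(ch))
vm_b.write(hv, 0x9000_0000, ord("!"))
print("".join(uart_a.output), "".join(uart_b.output))  # hi !
```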

Virtual interrupts

Interrupts are used by hardware to signal events to the running software. In a virtualized system, in addition to the hypervisor handling interrupts coming from real hardware, we also need a way to deliver interrupts to VMs so that guests can interact with hardware blocks as they would on real hardware. When a guest is given direct access to a device, the interrupt controller must be configured so that the device's interrupts reach the virtualized target and are handled by the guest; when the hypervisor emulates a virtual device, it has to generate the interrupts itself. Both cases require support in the CPU and in the interrupt controller itself.
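A simplified Python model of the two delivery paths, assuming a GIC-like design in which the hypervisor makes virtual interrupts visible to a guest by writing them into a small, fixed set of per-vCPU list registers (GICv3 provides such list registers). Interrupts that do not fit are kept pending in software by the hypervisor. The classes `VCpu` and `IrqRouter` and all interrupt numbers are hypothetical, and priorities and active/deactivate states are omitted.

```python
# Hypothetical model of virtual interrupt delivery (not a real GIC driver).
from collections import deque


class VCpu:
    NUM_LIST_REGS = 4  # assumed; real GICs implement a small, fixed number

    def __init__(self, name):
        self.name = name
        self.list_regs = []      # virtual IRQs currently visible to the guest
        self.overflow = deque()  # pending IRQs the hypervisor holds in software

    def inject(self, virq):
        if len(self.list_regs) < self.NUM_LIST_REGS:
            self.list_regs.append(virq)
        else:
            self.overflow.append(virq)

    def guest_ack(self):
        """Guest takes the oldest virtual IRQ; the hypervisor refills the slot."""
        virq = self.list_regs.pop(0)
        if self.overflow:
            self.list_regs.append(self.overflow.popleft())
        return virq


class IrqRouter:
    def __init__(self):
        self.passthrough = {}  # physical IRQ -> (vCPU, virtual IRQ)

    def assign(self, pirq, vcpu, virq):
        self.passthrough[pirq] = (vcpu, virq)

    def on_physical_irq(self, pirq):
        """Direct device access: forward the hardware interrupt to its owner."""
        vcpu, virq = self.passthrough[pirq]
        vcpu.inject(virq)

    def raise_emulated(self, vcpu, virq):
        """Emulated device: no hardware line exists, so the hypervisor injects it."""
        vcpu.inject(virq)


router = IrqRouter()
guest = VCpu("guest0")
router.assign(pirq=42, vcpu=guest, virq=33)  # passed-through device
router.on_physical_irq(42)
router.raise_emulated(guest, 40)             # e.g. a virtual UART
print(guest.guest_ack(), guest.guest_ack())  # 33 40
```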

HiRTOS separation kernel

HiRTOS is not a hypervisor in the "classical sense" but a safety-centric RTOS written in Ada; it does, however, use virtualization technologies to provide stronger isolation than would otherwise be possible. This mode of operation is called a separation kernel: HiRTOS schedules so-called partitions instead of threads, as an RTOS typically would. A partition in this case is an isolation unit that runs a piece of software, usually another instance of HiRTOS. The design of HiRTOS relies on several assumptions: each partition has its own set of physical interrupts, partitions and instances neither share resources nor communicate with each other, and HiRTOS provides no hardware virtualization (only physical device passthrough).
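Here is an illustrative Python sketch of the separation-kernel model (HiRTOS itself is written in Ada, and this is not its code). It encodes the assumptions listed above: each partition owns a disjoint set of physical interrupts, which `validate` checks, and the kernel schedules partitions rather than threads. The round-robin policy with fixed time slices, the partition names and the IRQ numbers are assumptions for illustration, not necessarily how HiRTOS schedules partitions.

```python
# Illustrative separation-kernel model (HiRTOS is written in Ada; this is not its code).
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Partition:
    name: str
    interrupts: frozenset  # physical IRQs owned exclusively by this partition
    time_slice_ms: int     # assumed fixed time slice


def validate(partitions):
    """Enforce 'no resource sharing': every physical IRQ has exactly one owner."""
    owner = {}
    for p in partitions:
        for irq in p.interrupts:
            if irq in owner:
                raise ValueError(f"IRQ {irq} claimed by {owner[irq]} and {p.name}")
            owner[irq] = p.name
    return owner


def schedule(partitions, total_ms):
    """Round-robin over partitions (not threads) for total_ms milliseconds."""
    timeline, t = [], 0
    for p in cycle(partitions):
        if t >= total_ms:
            break
        timeline.append((t, p.name))
        t += p.time_slice_ms
    return timeline


parts = [
    Partition("hirtos_0", frozenset({40, 41}), time_slice_ms=10),
    Partition("hirtos_1", frozenset({42}), time_slice_ms=5),
]
validate(parts)
print(schedule(parts, 30))
# [(0, 'hirtos_0'), (10, 'hirtos_1'), (15, 'hirtos_0'), (25, 'hirtos_1')]
```

Checking interrupt ownership up front mirrors the "no sharing" design: a configuration in which two partitions claim the same interrupt is rejected before anything runs, rather than arbitrated at runtime.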

Below you can find a screencast of a demo script with two instances of HiRTOS running on an Arm Cortex-R52 simulated in Renode:

To run the demo locally, install renode-run, which lets you run commands and scripts in Renode:

pip3 install --upgrade --user git+https://github.com/antmicro/renode-run

Use renode-run to install the latest nightly version of Renode:

renode-run download

Execute the demo script in Renode:

renode-run -- -e 'i @scripts/single-node/cortex-r52-hirtos.resc'

In the Renode Monitor window, execute s to start the simulation.

Xen hypervisor

Unlike HiRTOS, Xen is a full-fledged hypervisor, purpose-built to run multiple virtual machines simultaneously, monitor them, provide virtual devices and more. Because of this, beyond what was necessary to run HiRTOS in Renode, we had to add dedicated virtualization support to the CPU code as well as to the Arm Generic Interrupt Controller (GIC) model, since Xen makes full use of the virtual interrupts, hypervisor traps and (on systems with an MMU) two-stage memory translation described above.

Upstream Xen does not support Cortex-R52 CPUs, but we were able to use a fork of the project created by Xilinx, which adds the Cortex-R52 target.

The screencast below shows a demo script with Xen acting as a hypervisor, running on an Arm Cortex-R52 CPU simulated in Renode:

To run this demo locally, follow the steps from the previous section to install renode-run and the latest Renode nightly, then execute the Xen demo script:

renode-run -- -e 'i @scripts/single-node/cortex-r52-xen-zephyr.resc'

In the Renode Monitor window, execute s to start the simulation.

Build secure complex systems with Antmicro

Thanks to virtualization support for Armv8-R setups, Renode lets you develop complex boot flows and rich operating systems for modern heterogeneous systems, and helps you ensure that they provide the necessary functionality and meet the security requirements of server applications and demanding industries such as automotive and space.

If you are interested in using hypervisors in Renode simulations to develop and test Armv8-R-based systems, in extending virtualization support to other architectures, or in taking advantage of Antmicro's end-to-end engineering services and open source tool portfolio to build complete, well-tested multi-node systems, let us know at contact@antmicro.com.